Beginnings of Linear Regression

I don't know about you, but the phrase "linear regression" felt like some extremely complex and out of reach concept within AI. That was until I began reading the book, Deep Learning, by John D. Kelleher.

The book is a part of a larger collection called The MIT Press Essential Knowledge Series, and even though I have only read three quarters of the book, I couldn't recommend the series more. It is the perfect collection for learners, focusing on diversity of topics and simple introductions. The series includes everything from neurolinguistics to hunting, so I am certain you will find something that piques your interest.

Blabbering aside, Deep Learning introduced me to the most basic concepts behind machine learning, one of which being linear regression. The purpose of the procedure is to find a line of best fit between the variables in a dataset. When working with a machine learning algorithm, programs are trained on large, complex datasets that seemingly have no pattern. Linear regression simply aims to extract patterns and meaningful insights from chaotic data, uncovering relationships that may not be visible at first glance.

In its simplest form, linear regression predicts a singular predictor variable. It is derived from the linear equation of a line:

$$ Y = \beta_0 + \beta_1X $$

Where:

$\quad\beta_0$ = y-intercept

$\quad\beta_1$ = slope

With this in mind, your goal with linear regression is to find the line of best fit, from a set of data. Meaning, we must now find a slope and intercept that best represents the raw data. To find these values, we first need a few things.

The first of which being $ \bar{X}\ and\ \bar{Y} $, which are calculated using the following equation:

$$ \bar{Q}\ =\ \frac 1n \ \sum_{i=1}^n \bar{Q}_i $$

With that, we are now able to calculate the slope$(\beta_1)$. Representing the change in Y for every unit change of X, it is calculated using the following equation:

$$ \beta_1\ =\ \frac { cov(X,Y)} {var(X)} $$

Where:

$ \quad cov(X,Y)\ =\ \sum_{i=1}^n{(X_i - \bar{X})(Y_i - \bar{Y})}$

$ \quad var(X)\ =\ \sum_{i=1}^n{(X_i - \bar{X})^2}$

With the slope out of the way, we are just missing the y-intercept($\beta_0$). Luckily, this one is simple to calculate:

$$ \beta_0\ =\ \bar{Y} - \beta_1\bar{X} $$

With all that, we’ve got our mise en place. The path may seem daunting at first, but if you set aside the fancy Greek letters and symbols and focus on what the equations actually represent, things become a lot smoother. Now, bringing it all together, we can finally piece together the once-simple… yet still simple equation:

\[ Y = \beta_0 + \beta_1X \]

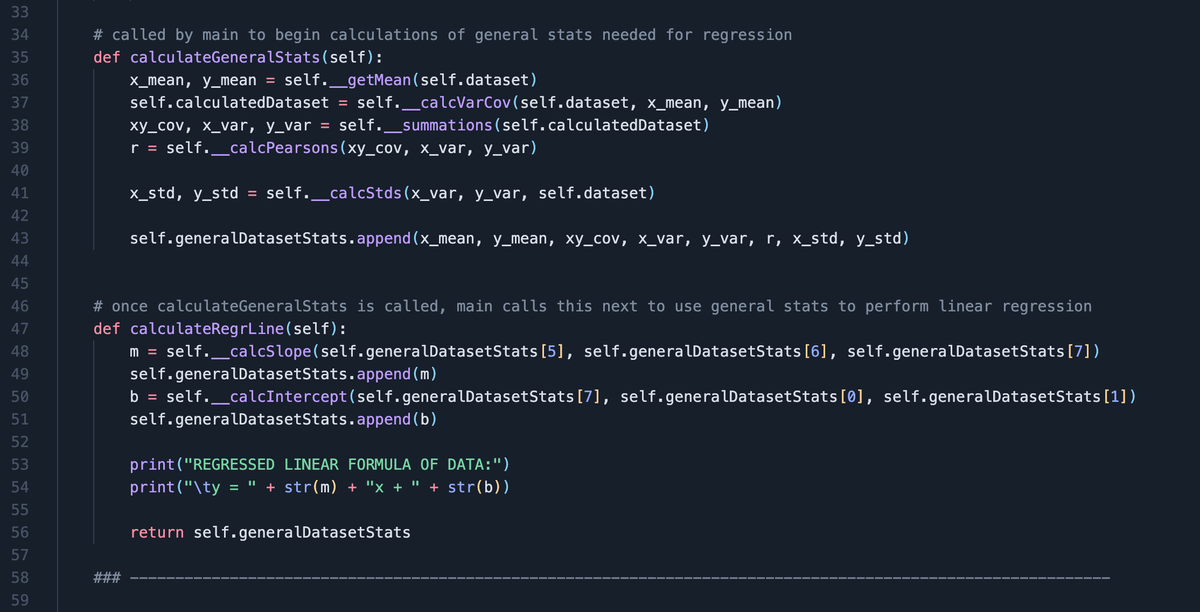

After reading through this concept and a few more chapters in the book, I wanted to start building something that would help me understand it fully. You can check out the code here:

I know it is a very simple algorithm, but it is important that I build a strong foundation before diving any deeper. Realistically, I probably won't need to write this procedure again, thanks to industry tools and resources. However, I'm hoping that by understanding it at this level, I will gain a competitive edge in the industry.

I plan on continually adding to this repo as I read and discover more about machine learning in the future.

Thank you all for reading once again. I know it has been a while since I last posted, but I started school again and so the grind begins.

I hope to write to you all soon!

~ Cyrus Foroudian